Contributors

Bala Balaiah, Data & Analytics Practice Lead

William Boyd, Data Engineer

This is part 3 of a 3-part blog post about uploading a custom ML script to SageMaker. If you have not yet read part 1 or part 2 of this series, you should do so now. Part 3 covers creating a Lambda function and a REST API that calls it.

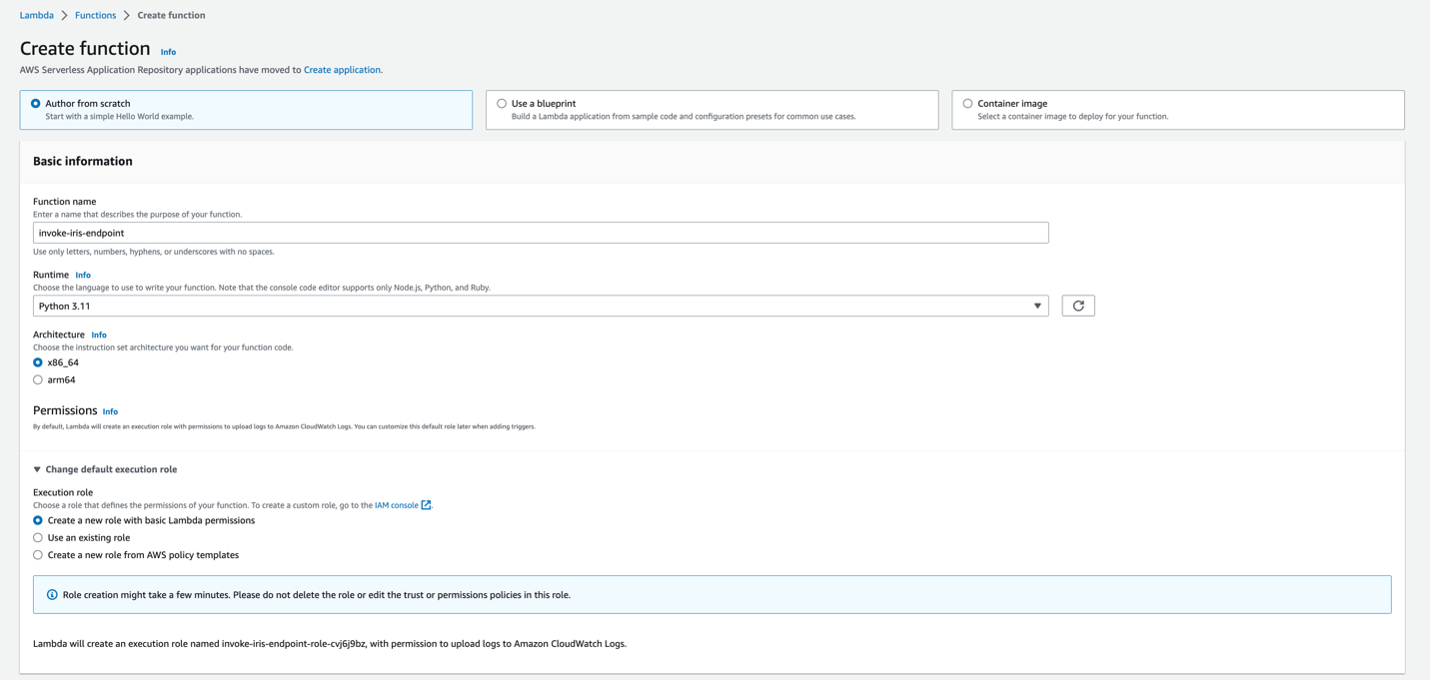

Now, we are going to set up our Lambda API. First, navigate over to Lambda and create a new function. We called ours “invoke-iris-endpoint.” For our role, we will create a basic role with Lambda permissions and add a few policies to it ourselves.

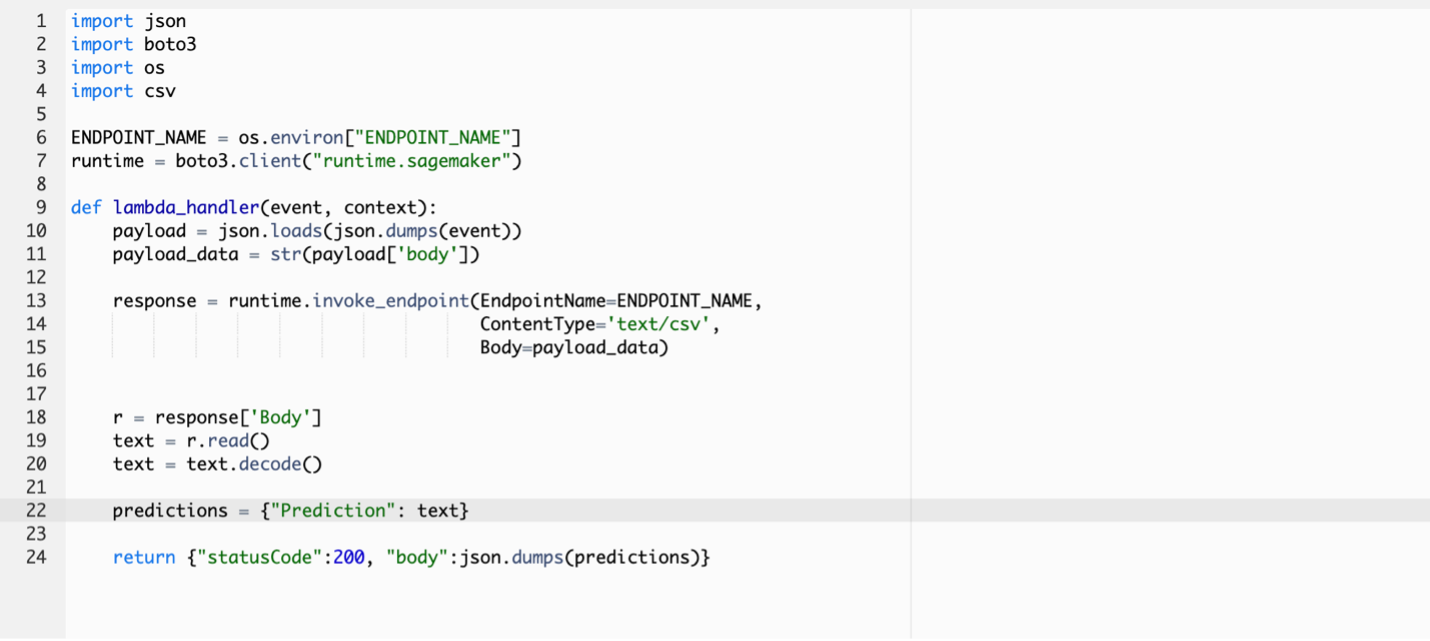

Below is the code we will use for the Lambda function.

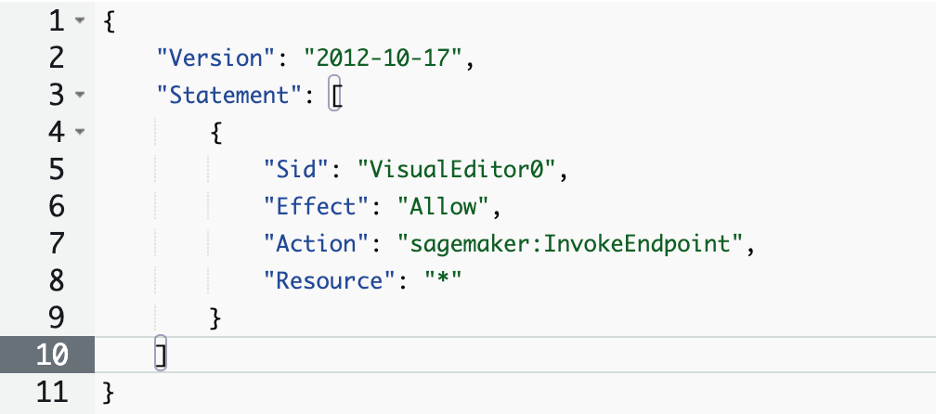
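For readers who want something to copy, here is a minimal sketch of what a handler like this might look like. It is not necessarily the exact code in the screenshot. It assumes the request body reaches the function as a JSON object with a hypothetical `data` key holding one comma-separated row of features, and that the model container accepts `text/csv`. The optional `runtime_client` parameter is our own addition so the handler can be tried locally without AWS credentials.

```python
import json
import os


def lambda_handler(event, context, runtime_client=None):
    """Forward one CSV row to the SageMaker endpoint and return its prediction."""
    if runtime_client is None:
        # boto3 ships with the Lambda Python runtime. It is imported lazily so
        # the handler can be exercised locally with a stand-in client.
        import boto3

        runtime_client = boto3.client("sagemaker-runtime")

    # Read the endpoint name from the ENDPOINT_NAME environment variable.
    endpoint_name = os.environ["ENDPOINT_NAME"]

    # Assumption: the event looks like {"data": "<comma-separated features>"}.
    payload = event["data"]

    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=payload,
    )

    # The response body is a stream; its contents depend on the model container.
    prediction = response["Body"].read().decode("utf-8").strip()
    return {"statusCode": 200, "body": json.dumps({"prediction": prediction})}
```

Reading the endpoint name from the environment rather than hard-coding it means the same function can point at a different endpoint without a code change.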

Make sure you hit Deploy before moving on to the next step so that the code saves. Next, we need to add our endpoint name as an environment variable and give our Lambda role extra permissions. First, navigate to the Configuration tab in Lambda. Then go to Environment variables, click Edit, add an environment variable, and enter “ENDPOINT_NAME” for the key and the name of our model endpoint, in our case “iris-model-endpoint,” as the value.

Next, go to the Permissions tab and click on the role name. This will take us over to IAM, where we can add policies to this role. Select Add Permissions, then Create inline policy, then select JSON. Then, add the following policy.
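As a rough sketch, an inline policy that lets the role invoke a single endpoint could look like the document built below. The region and account ID are placeholders that you would replace with your own, and the authors' actual policy may differ (for example, it may use a broader resource). Running the script prints JSON that can be pasted into the IAM policy editor.

```python
import json


def build_invoke_policy(region: str, account_id: str, endpoint_name: str) -> dict:
    """Build an IAM policy document allowing InvokeEndpoint on one endpoint."""
    endpoint_arn = (
        f"arn:aws:sagemaker:{region}:{account_id}:endpoint/{endpoint_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sagemaker:InvokeEndpoint",
                "Resource": endpoint_arn,
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder region and account ID; substitute your own values.
    policy = build_invoke_policy("us-east-1", "123456789012", "iris-model-endpoint")
    print(json.dumps(policy, indent=2))
```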

Give the policy a name, in our case, “endpoint-invoke-policy,” and create it.

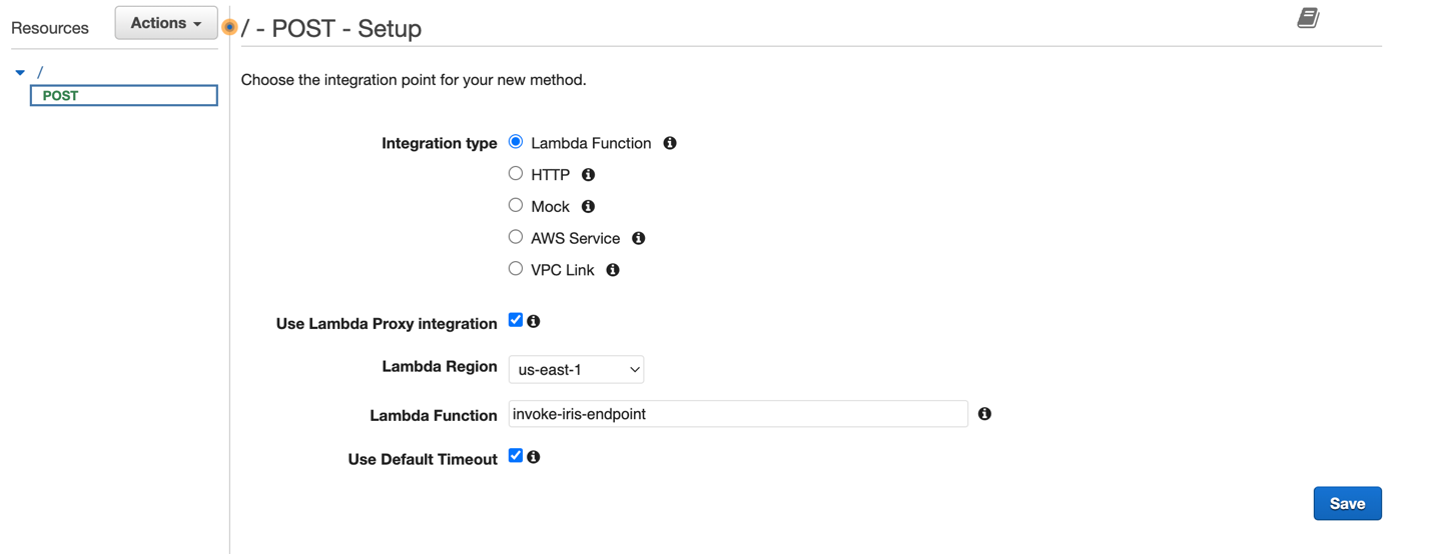

Next, using the AWS Console, navigate to API Gateway. We are going to create a REST API. Under Create New API, select New API instead of Example API. Give it a name and keep the endpoint type as Regional. Now, we will walk through the steps of getting our API up and running. Under the Actions menu, create a new method. Then select POST and click on the checkmark. Enter these settings, but change the Lambda Function name to whatever you called yours.

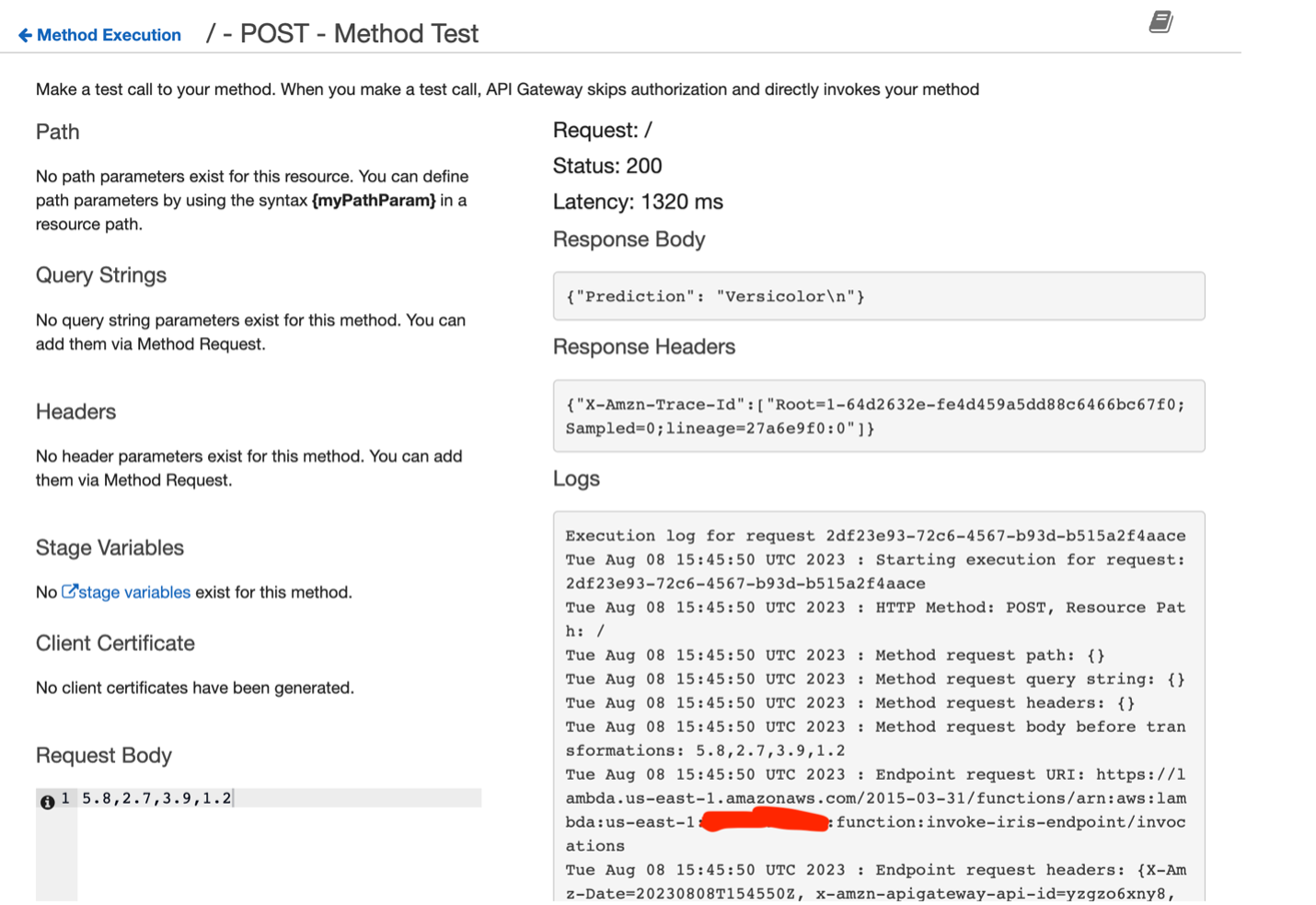

Now that our API is set up, we will test it. After saving the POST method, we should see the word TEST with a lightning bolt. Click that to begin our test. In the request body, we will send sample data as CSV. After taking a quick look back at the dataset we trained our model on, we are going to write in some dummy values that are within the ranges in our data. After entering a set of values in the Request Body section, run the test, and this is what we should get.

Now that our endpoint and API are operational, you can use tools like Postman for further testing. This guide walks through the steps of bringing your own machine learning algorithm into AWS SageMaker. After the endpoint is created in SageMaker, there are other options available to set up pipelines and training, but this guide sticks to the basics of the process.
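For further testing from a script instead of Postman, a small client along these lines may be enough. This is a sketch under several assumptions: the API has been deployed to a stage so that it has an invoke URL, the URL shown is a made-up placeholder, the request body uses a hypothetical `data` key, and the sample feature values are illustrative only and should be replaced with values that match your model's inputs.

```python
import json
import urllib.request

# Hypothetical invoke URL; replace with the one shown for your deployed stage.
API_URL = "https://abc123.execute-api.us-east-1.amazonaws.com/test"


def build_request(url: str, csv_row: str) -> urllib.request.Request:
    """Build a POST request carrying one CSV row under the assumed "data" key."""
    body = json.dumps({"data": csv_row}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(url: str, csv_row: str, timeout: float = 10.0) -> str:
    """Send the request and return the raw response text."""
    with urllib.request.urlopen(build_request(url, csv_row), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    # Illustrative feature values only.
    print(predict(API_URL, "5.1,3.5,1.4,0.2"))
```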

Useful links:

Medium Article: Build and Run a Docker Container for Your Machine Learning Model

Collabnix Article: How to Build and Run a Python App in a Container

AWS Documentation: Pushing a Docker Image

Medium Article: Build Your Own Container with Amazon Sagemaker

AWS Documentation: Creating a Private Repository

AWS Documentation: Train a Model with Amazon Sagemaker

YouTube Video: Sagemaker Tutorial – 2 | Invoke SageMaker Endpoint using Lambda & API Gateway